Data Management with Databricks: Big Data with Delta Lakes

University/Institute: Coursera Project Network

Description

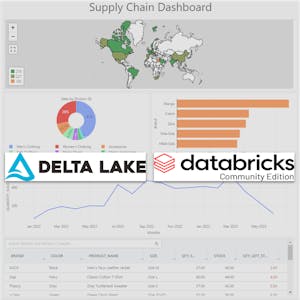

In this 2-hour guided project, "Data Management with Databricks: Big Data with Delta Lakes", you will work alongside the instructor to achieve the following objectives:

1. Create Delta tables in Databricks and write data to them. Gain hands-on experience setting up and managing Delta tables, a storage format built on Parquet files plus a transaction log and designed for performance and reliability.
2. Transform a Delta table using Python, then query the data with SQL to build a comprehensive dashboard. Learn how to apply Python-based transformations to Delta tables and write SQL queries that extract the insights needed for a Supply Chain dashboard.
3. Use Delta Lake's merge operation and version control to update Delta tables efficiently. Explore how the merge operation performs upserts and other data updates, and learn how Delta Lake's built-in version history lets you track and access previous versions of a table as needed.

Through a real-world business scenario, you will use Databricks to build an end-to-end data pipeline that integrates multiple JSON data files and applies transformations, producing analysis-ready data and actionable insights.

This intermediate-level guided project is designed for data engineers who build data pipelines for their companies using Databricks. To succeed in it, you need prior experience writing Python scripts, including importing libraries, setting up variables, manipulating data frames, and using functions. You should also be comfortable writing SQL queries that aggregate, filter, and join tables.
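The following sketch gives a rough illustration of the merge (upsert) and versioning ideas covered in this project by modeling a versioned table in plain Python. It is a hypothetical toy and not the Delta Lake API. The class `ToyVersionedTable`, its methods, and the `order_id`/`status` columns are invented for illustration. In Databricks itself you would typically use `MERGE INTO` in SQL, or `DeltaTable.merge(...)` with `whenMatchedUpdateAll()` and `whenNotMatchedInsertAll()` in Python, and you would read older versions with `VERSION AS OF` or the `versionAsOf` reader option.

```python
from copy import deepcopy
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


class ToyVersionedTable:
    """Hypothetical in-memory stand-in for a Delta table (NOT the Delta Lake API).

    Every write commits a full new snapshot, so older versions stay readable.
    """

    def __init__(self, key: str, rows: List[Row]) -> None:
        self.key = key
        # Version 0 is the initial write; each later commit appends a snapshot.
        self._versions: List[Dict[Any, Row]] = [{r[key]: dict(r) for r in rows}]

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def merge(self, updates: List[Row]) -> None:
        """Upsert: update rows whose key matches, insert rows whose key does not."""
        snapshot = deepcopy(self._versions[-1])  # never mutate older versions
        for row in updates:
            k = row[self.key]
            if k in snapshot:
                snapshot[k].update(row)  # like "WHEN MATCHED THEN UPDATE"
            else:
                snapshot[k] = dict(row)  # like "WHEN NOT MATCHED THEN INSERT"
        self._versions.append(snapshot)  # commit as a new version

    def read(self, version_as_of: Optional[int] = None) -> List[Row]:
        """Return the latest snapshot, or an older one (a toy 'time travel')."""
        v = self.version if version_as_of is None else version_as_of
        snapshot = self._versions[v]
        return [dict(snapshot[k]) for k in sorted(snapshot)]


# Usage: upsert a batch of order updates, then read current and original data.
orders = ToyVersionedTable("order_id", [
    {"order_id": 1, "status": "shipped"},
    {"order_id": 2, "status": "pending"},
])
orders.merge([
    {"order_id": 2, "status": "shipped"},  # key exists -> updated
    {"order_id": 3, "status": "pending"},  # new key -> inserted
])
print(orders.read())                 # version 1
print(orders.read(version_as_of=0))  # version 0, unchanged
```

Storing full snapshots keeps the toy simple. Delta Lake instead records each commit as an entry in its transaction log that adds and removes data files, so older versions remain readable until their files are vacuumed.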