Description

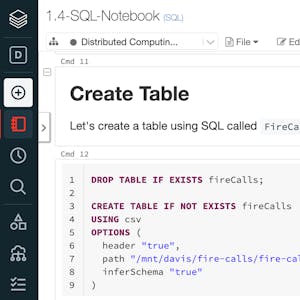

This course is all about big data. It’s for students with SQL experience that want to take the next step on their data journey by learning distributed computing using Apache Spark. Students will gain a thorough understanding of this open-source standard for working with large datasets. Students will gain an understanding of the fundamentals of data analysis using SQL on Spark, setting the foundation for how to combine data with advanced analytics at scale and in production environments. The four modules build on one another and by the end of the course you will understand: the Spark architecture, queries within Spark, common ways to optimize Spark SQL, and how to build reliable data pipelines. The first module introduces Spark and the Databricks environment including how Spark distributes computation and Spark SQL. Module 2 covers the core concepts of Spark such as storage vs. compute, caching, partitions, and troubleshooting performance issues via the Spark UI. It also covers new features in Apache Spark 3.x such as Adaptive Query Execution. The third module focuses on Engineering Data Pipelines including connecting to databases, schemas and data types, file formats, and writing reliable data. The final module covers data lakes, data warehouses, and lakehouses. Students build production grade data pipelines by combining Spark with the open-source project Delta Lake. By the end of this course, students will hone their SQL and distributed computing skills to become more adept at advanced analysis and to set the stage for transitioning to more advanced analytics as Data Scientists.